About me

I am currently a Computer Science PhD candidate at Purdue University, advised by Professor Voicu Popescu. My research interests lie in extended reality (XR), especially haptics for virtual reality (VR). I envision a future of VR haptics in which the user can touch any virtual object within reach, much like in the movie Ready Player One. I have taken a first step in this direction by designing and building an Encountered-Type Haptic Device (ETHD) in the form of a Cartesian robot. I am also a big fan of wearable haptic devices, which I plan to work on in the near future. Beyond haptics, I am interested in other XR areas, such as cloud VR and collaborative augmented reality (AR). Here is my CV.

Research projects

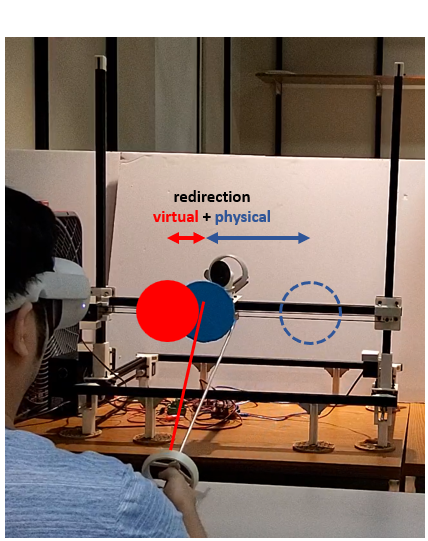

VR haptics with ETHD

One way to let the user touch any virtual object is to build a high-fidelity physical replica of the virtual environment, but that is impractical. A better approach is to reduce the differences between the virtual and the physical world. One option is to change the physical world so that it aligns locally with the part of the virtual world the user is about to interact with. For this, I built an ETHD: a Cartesian robot that moves a physical object to align it with the virtual object the user is about to touch. Another option is to change the virtual world to align it with the physical world. I have developed physical and virtual redirection algorithms that work together to let the user touch stationary or dynamic virtual objects of various shapes, with the virtual and physical contacts perfectly synchronized. This gives the user safe and believable haptic feedback. The image shows my ETHD providing haptic feedback to the user.

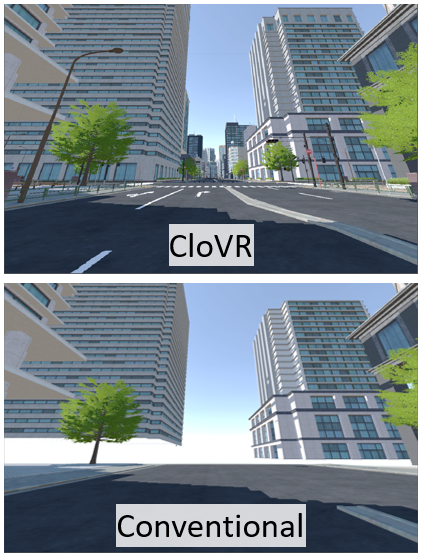

CloVR: Fast-Startup Low-Latency Cloud VR

We now have amazing self-contained XR headsets with on-board tracking, rendering, and display that deliver completely untethered VR experiences. One challenge is increasing the complexity of the virtual environments these headsets can render. I am working on a distributed VR system, dubbed CloVR, which partitions the rendering load between the server and the client, shielding the client from the full complexity of the virtual environment. The images show how CloVR delivers a visually complete and functional virtual environment much faster than a conventional download of the visible objects.

AR Attention Guidance in Co-Located Collaboration

Consider two collaborators, e.g., an instructor and a student, standing side by side and looking at the same 3D scene. I developed a method that allows the instructor to point out an element of the scene to the student using tablets or phones. The instructor circles the target on their display, and a circle appears around the target on the student's display. Then the student's display turns transparent, that is, the live video it shows gradually changes to approximate what the student would see if the display were made of glass. The transparent display gives the student accurate directional guidance to the target, helping them find it with the naked eye. The image shows the transparent student display pointing to a window of the faraway building.